Metadata

- Author: Jay Alammar

- Full Title: The Illustrated DeepSeek-R1

- URL: https://newsletter.languagemodels.co/p/the-illustrated-deepseek-r1?triedRedirect=true

Highlights

- How LLMs are trained

Just like most existing LLMs, DeepSeek-R1 generates one token at a time, except it excels at solving math and reasoning problems because it is able to spend more time processing a problem through the process of generating thinking tokens that explain its chain of thought.

(View Highlight)

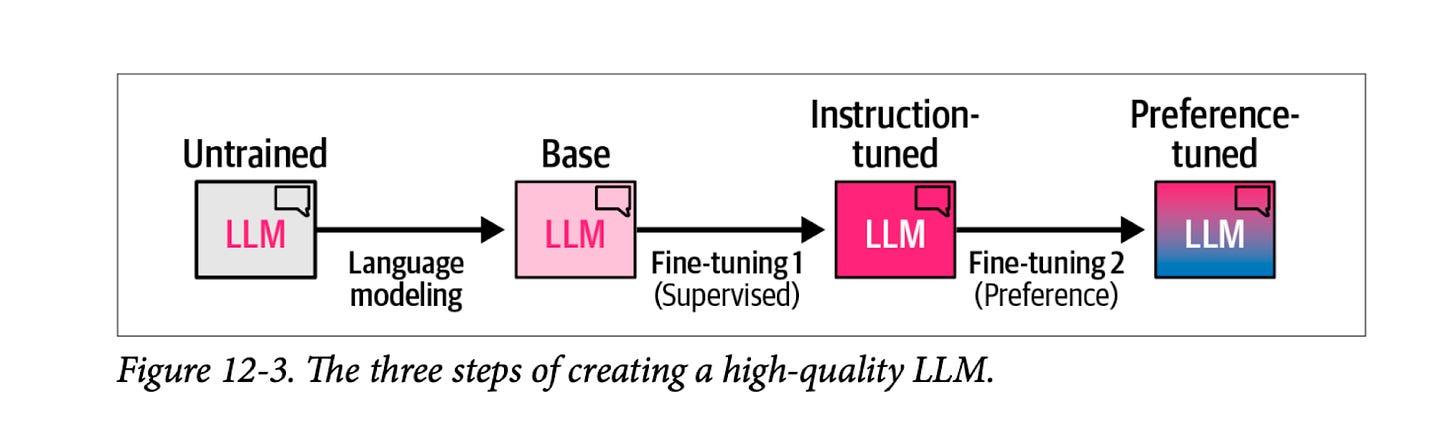

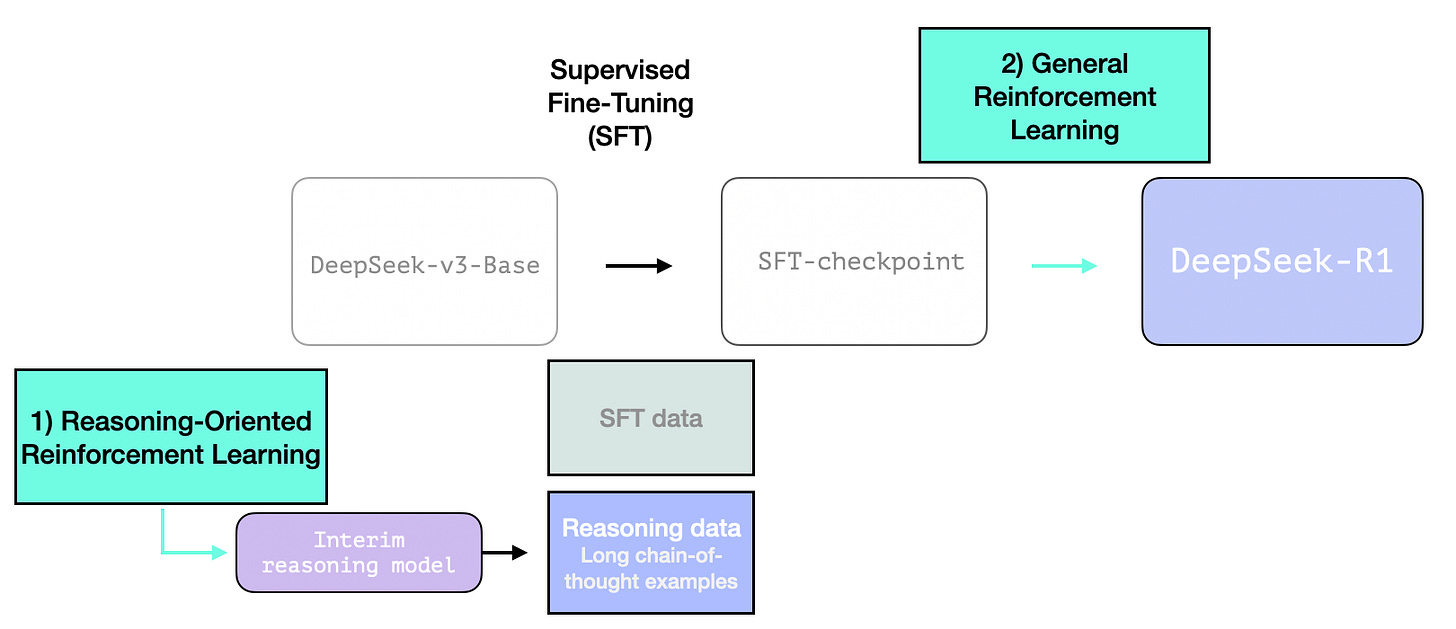

(View Highlight) - the general recipe of creating a high-quality LLM over three steps:

- The language modeling step where we train the model to predict the next word using a massive amount of web data. This step results in a base model.

- a supervised fine-tuning step that makes the model more useful in following instructions and answering questions. This step results in an instruction tuned model or a supervised fine -tuning / SFT model.

- and finally a preference tuning step which further polishes its behaviors and aligns to human preferences, resulting in the final preference-tuned LLM which you interact with on playgrounds and apps. (View Highlight)

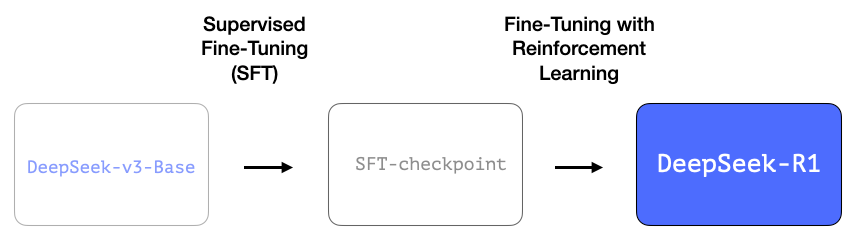

- DeepSeek-R1 follows this general recipe. The details of that first step come from a previous paper for the DeepSeek-V3 model. R1 uses the base model (not the final DeepSeek-v3 model) from that previous paper, and still goes through an SFT and preference tuning steps, but the details of how it does them are what’s different.

(View Highlight)

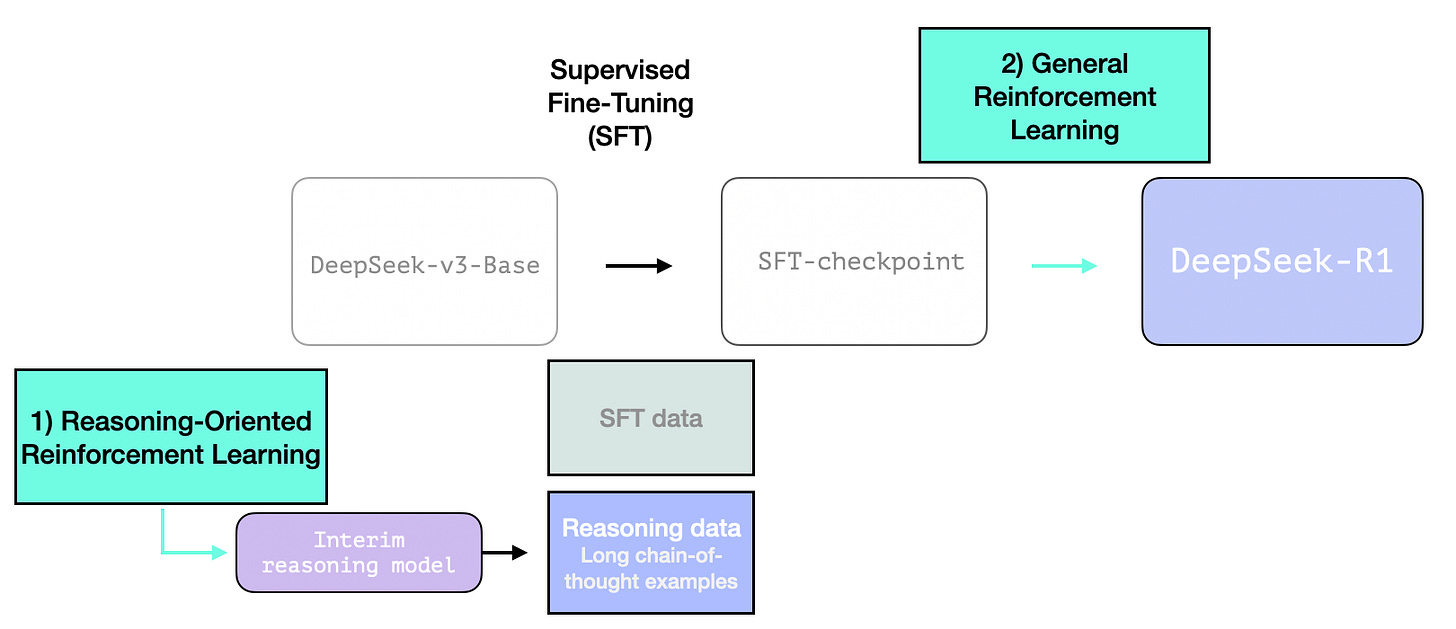

(View Highlight) - There are three special things to highlight in the R1 creation process.

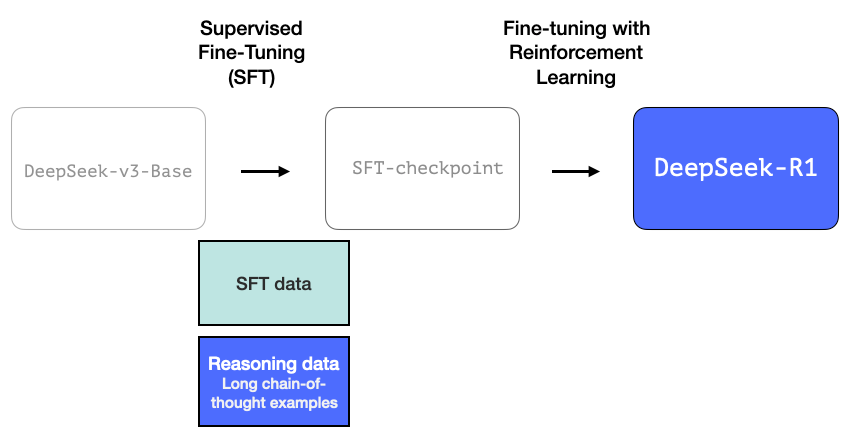

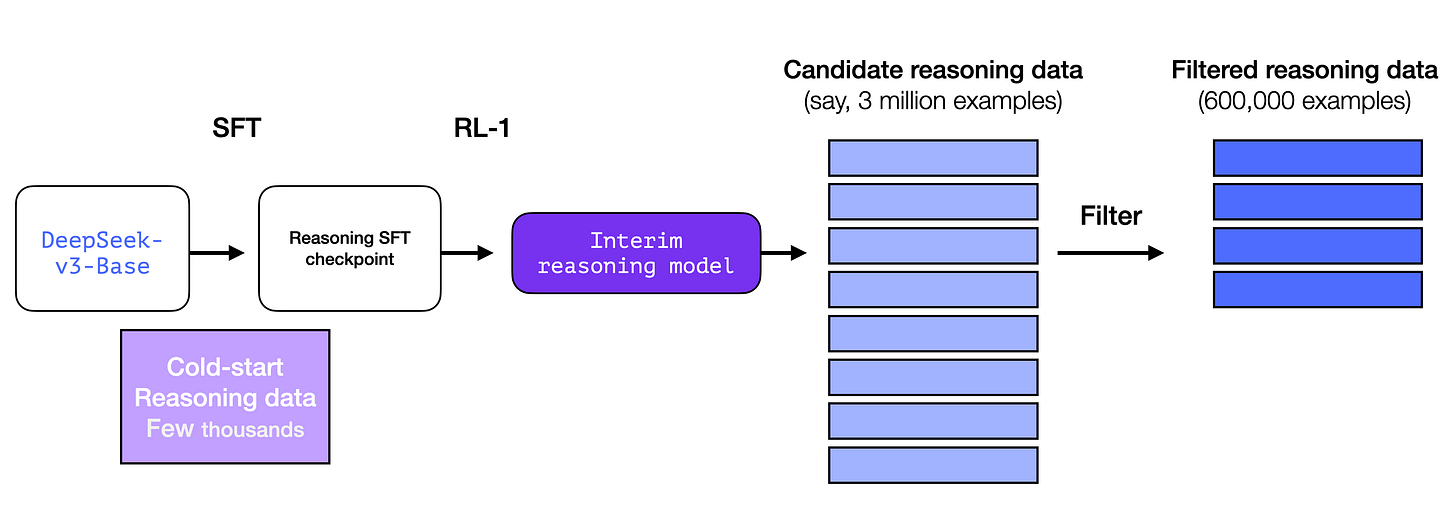

1- Long chains of reasoning SFT Data

This is a large number of long chain-of-thought reasoning examples (600,000 of them). These are very hard to come by and very expensive to label with humans at this scale. Which is why the process to create them is the second special thing to highlight (View Highlight)

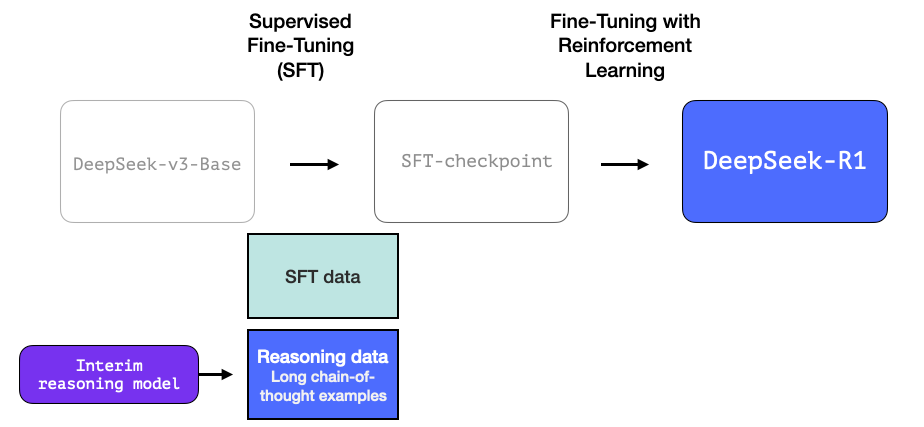

This is a large number of long chain-of-thought reasoning examples (600,000 of them). These are very hard to come by and very expensive to label with humans at this scale. Which is why the process to create them is the second special thing to highlight (View Highlight) - 2- An interim high-quality reasoning LLM (but worse at non-reasoning tasks).

This data is created by a precursor to R1, an unnamed sibling which specializes in reasoning. This sibling is inspired by a third model called R1-Zero (that we’ll discuss shortly). It is significant not because it’s a great LLM to use, but because creating it required so little labeled data alongside large-scale reinforcement learning resulting in a model that excels at solving reasoning problems.

The outputs of this unnamed specialist reasoning model can then be used to train a more general model that can also do other, non-reasoning tasks, to the level users expect from an LLM.

(View Highlight)

(View Highlight) - 3- Creating reasoning models with large-scale reinforcement learning (RL)

This happens in two steps:

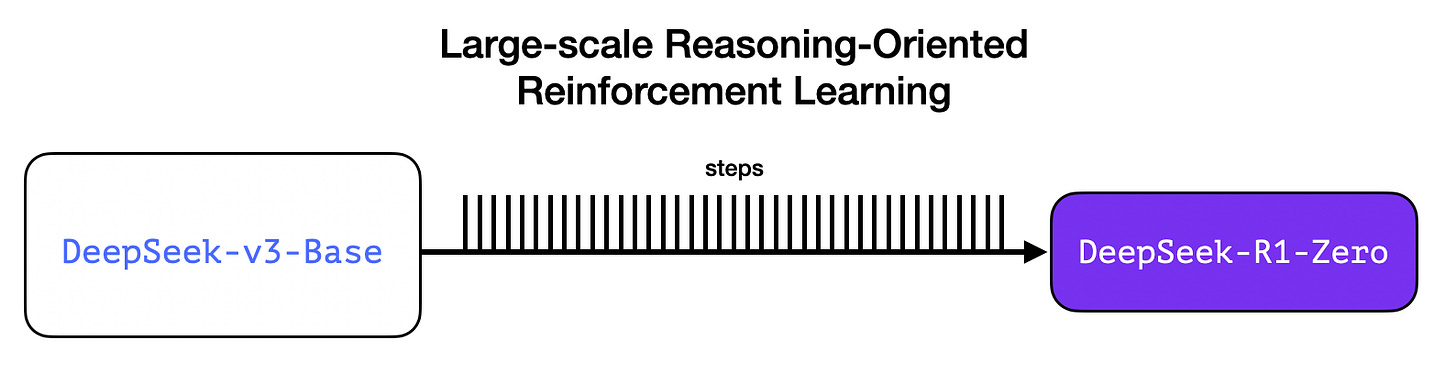

3.1 Large-Scale Reasoning-Oriented Reinforcement Learning (R1-Zero)

Here, RL is used to create the interim reasoning model. The model is then used to generate the SFT reasoning examples. But what makes creating this model possible is an earlier experiment creating an earlier model called DeepSeek-R1-Zero.

3.1 Large-Scale Reasoning-Oriented Reinforcement Learning (R1-Zero)

Here, RL is used to create the interim reasoning model. The model is then used to generate the SFT reasoning examples. But what makes creating this model possible is an earlier experiment creating an earlier model called DeepSeek-R1-Zero.

(View Highlight)

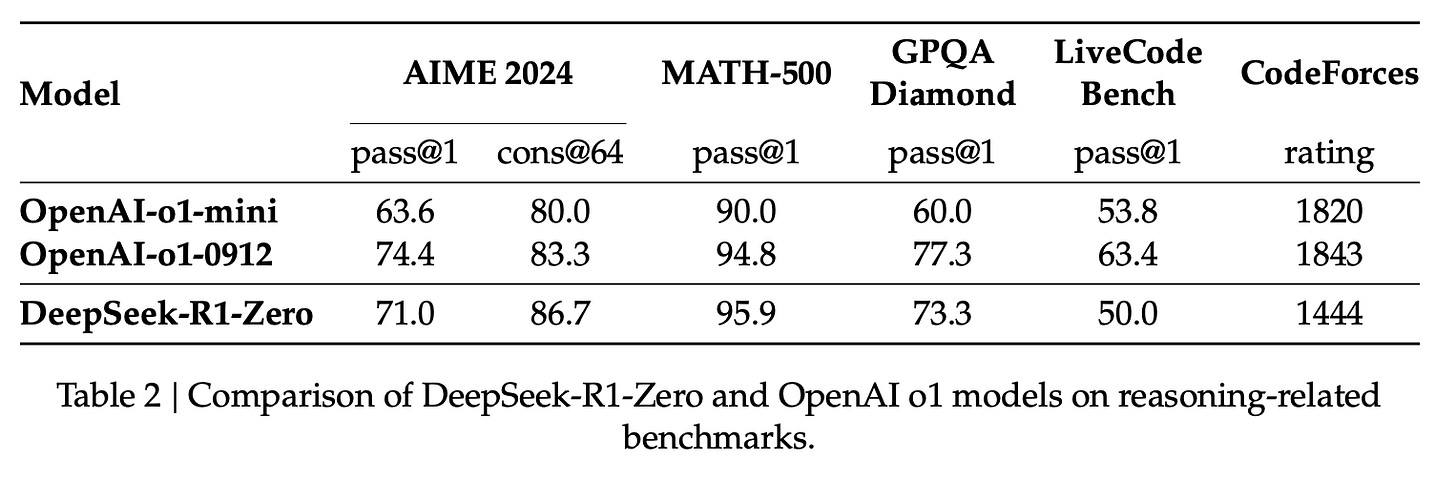

(View Highlight) - R1-Zero is special because it is able to excel at reasoning tasks without having a labeled SFT training set. Its training goes directly from a pre-trained base model through a RL training process (no SFT step). It does this so well that it’s competitive with o1.

This is significant because data has always been the fuel for ML model capability. How can this model depart from that history? This points to two things:

1- Modern base models have crossed a certain threshold of quality and capability (this base model was trained on 14.8 trillion high-quality tokens).

2- Reasoning problems, in contrast to general chat or writing requests, can be automatically verified or labeled. Let’s show this with an example. (View Highlight)

This is significant because data has always been the fuel for ML model capability. How can this model depart from that history? This points to two things:

1- Modern base models have crossed a certain threshold of quality and capability (this base model was trained on 14.8 trillion high-quality tokens).

2- Reasoning problems, in contrast to general chat or writing requests, can be automatically verified or labeled. Let’s show this with an example. (View Highlight) - Example: Automatic Verification of a Reasoning Problem

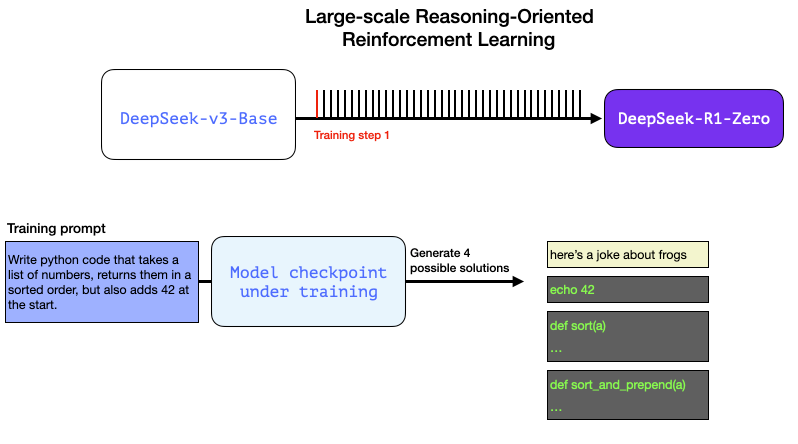

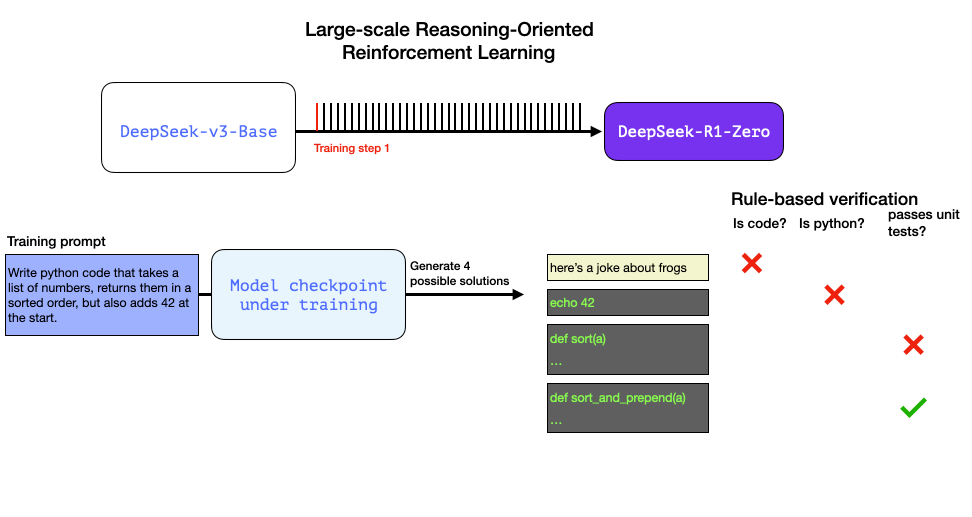

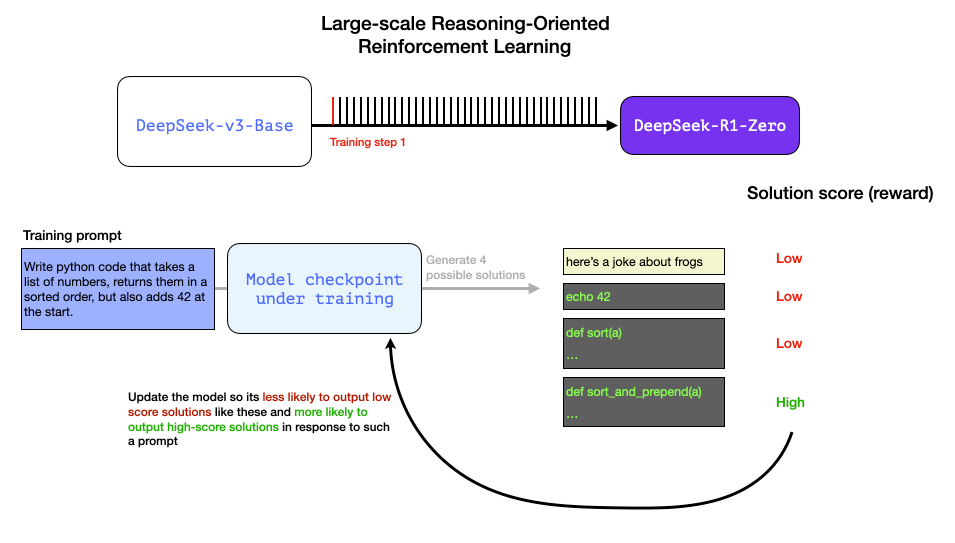

This can be a prompt/question that is a part of this RL training step:

Write python code that takes a list of numbers, returns them in a sorted order, but also adds 42 at the start. A question like this lends itself to many ways of automatic verification. Say we present this this to the model being trained, and it generates a completion: • A software linter can check if the completion is proper python code or not • We can execute the python code to see if it even runs • Other modern coding LLMs can create unit tests to verify the desired behavior (without being reasoning experts themselves). • We can go even one step further and measure execution time and make the training process prefer more performant solutions over other solutions — even if they’re correct python programs that solve the issue. We can present a question like this to the model in a training step, and generate multiple possible solutions.

(View Highlight)

(View Highlight) - We can automatically check (with no human intervention) and see that the first completion is not even code. The second one is code, but is not python code. The third is a possible solution, but fails the unit tests, and the forth is a correct solution.

These are all signals that can be directly used to improve the model. This is of course done over many examples (in mini-batches) and over successive training steps.

These are all signals that can be directly used to improve the model. This is of course done over many examples (in mini-batches) and over successive training steps.

(View Highlight)

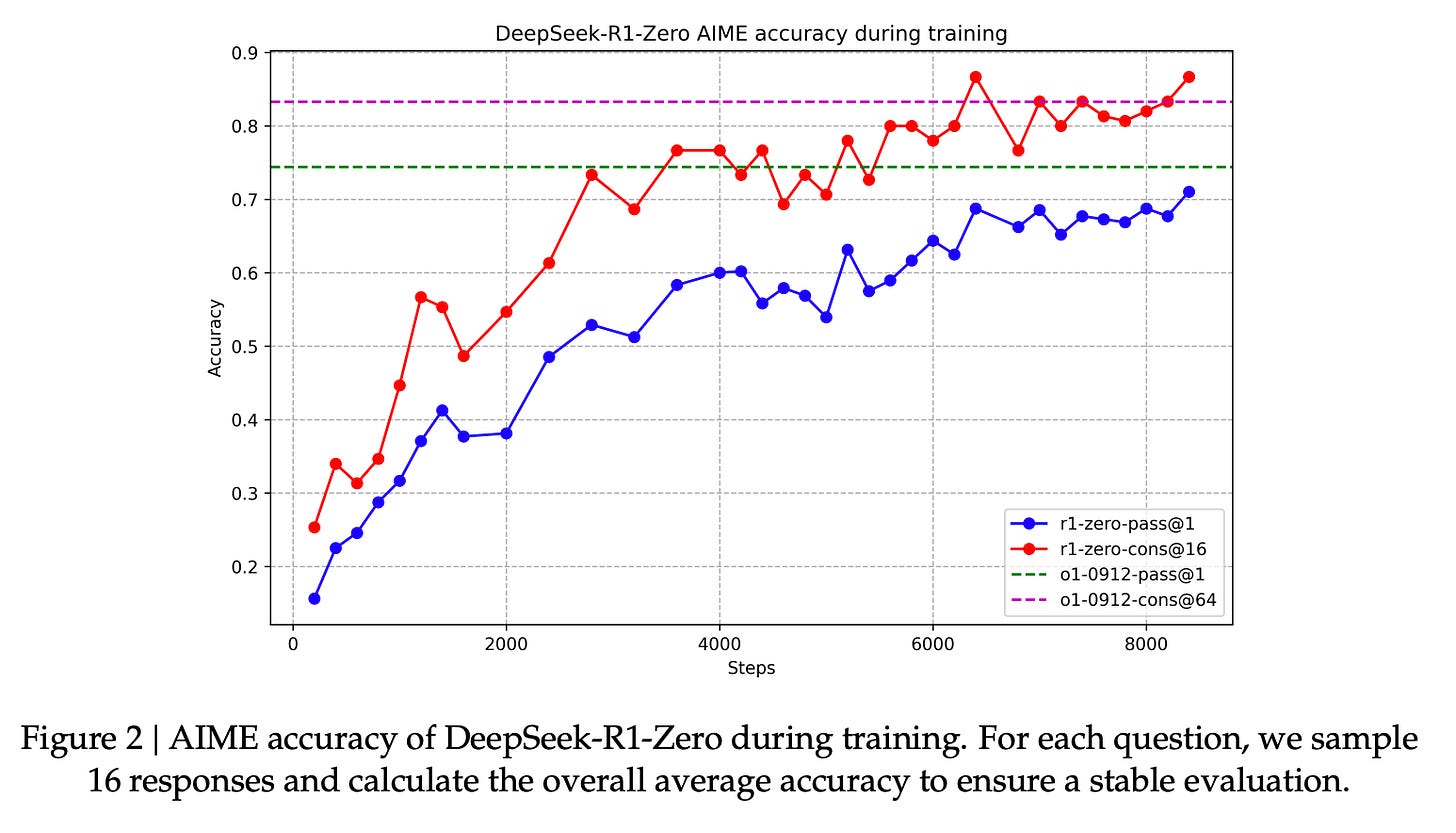

(View Highlight) - These reward signals and model updates are how the model continues improving on tasks over the RL training process as seen in Figure 2 from the paper.

Corresponding with the improvement of this capability is the length of the generated response, where the model generates more thinking tokens to process the problem.

Corresponding with the improvement of this capability is the length of the generated response, where the model generates more thinking tokens to process the problem.

(View Highlight)

(View Highlight) - This process is useful, but the R1-Zero model, despite scoring high on these reasoning problems, confronts other issues that make it less usable than desired.

Although DeepSeek-R1-Zero exhibits strong reasoning capabilities and autonomously develops unexpected and powerful reasoning behaviors, it faces several issues. For instance, DeepSeek-R1-Zero struggles with challenges like poor readability, and language mixing. (View Highlight)

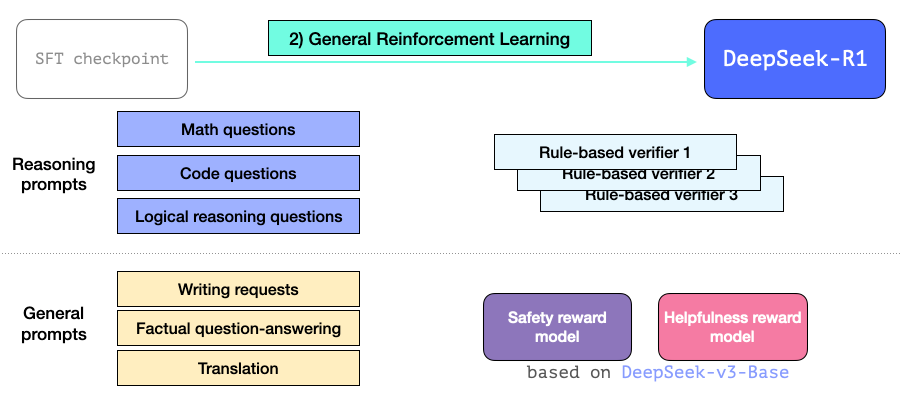

- R1 is meant to be a more usable model. So instead of relying completely on the RL process, it is used in two places as we’ve mentioned earlier in this section:

1- creating an interim reasoning model to generate SFT data points

2- Training the R1 model to improve on reasoning and non-reasoning problems (using other types of verifiers)

(View Highlight)

(View Highlight) - Creating SFT reasoning data with the interim reasoning model

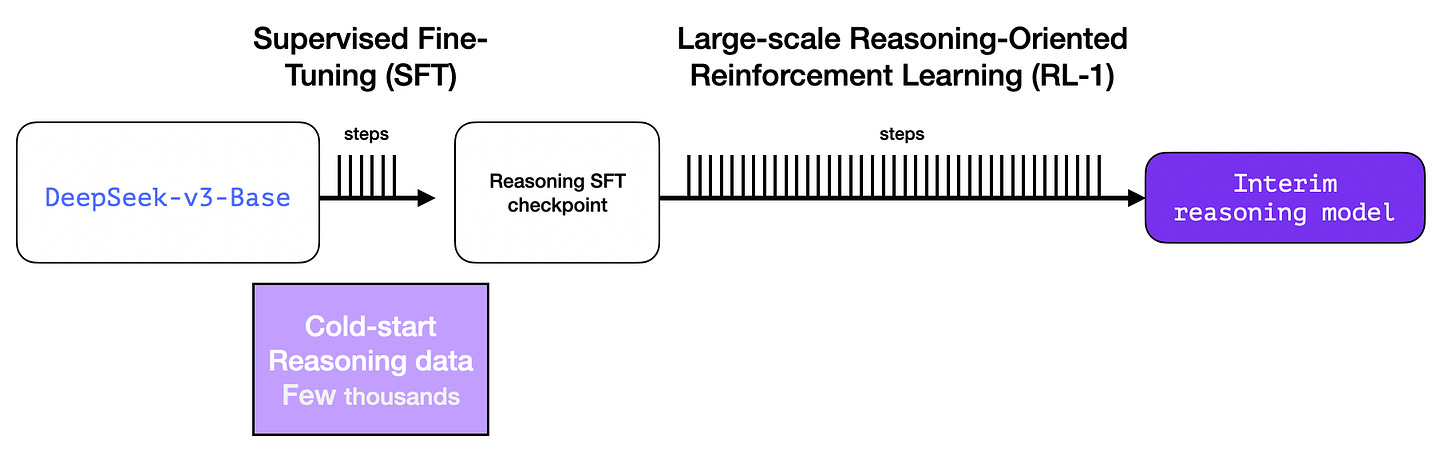

To make the interim reasoning model more useful, it goes through an supervised fine-tuning (SFT) training step on a few thousand examples of reasoning problems (some of which are generated and filtered from R1-Zero). The paper refers to this as cold start data”

2.3.1. Cold Start Unlike DeepSeek-R1-Zero, to prevent the early unstable cold start phase of RL training from the base model, for DeepSeek-R1 we construct and collect a small amount of long CoT data to fine-tune the model as the initial RL actor. To collect such data, we have explored several approaches: using few-shot prompting with a long CoT as an example, directly prompting models to generate detailed answers with reflection and verification, gathering DeepSeek-R1- Zero outputs in a readable format, and refining the results through post-processing by human annotators.

(View Highlight)

(View Highlight) - But wait, if we have this data, then why are we relying on the RL process? It’s because of the scale of the data. This dataset might be 5,000 examples (which is possible to source), but to train R1, 600,000 examples were needed. This interim model bridges that gap and allows to synthetically generate that extremely valuable data.

(View Highlight)

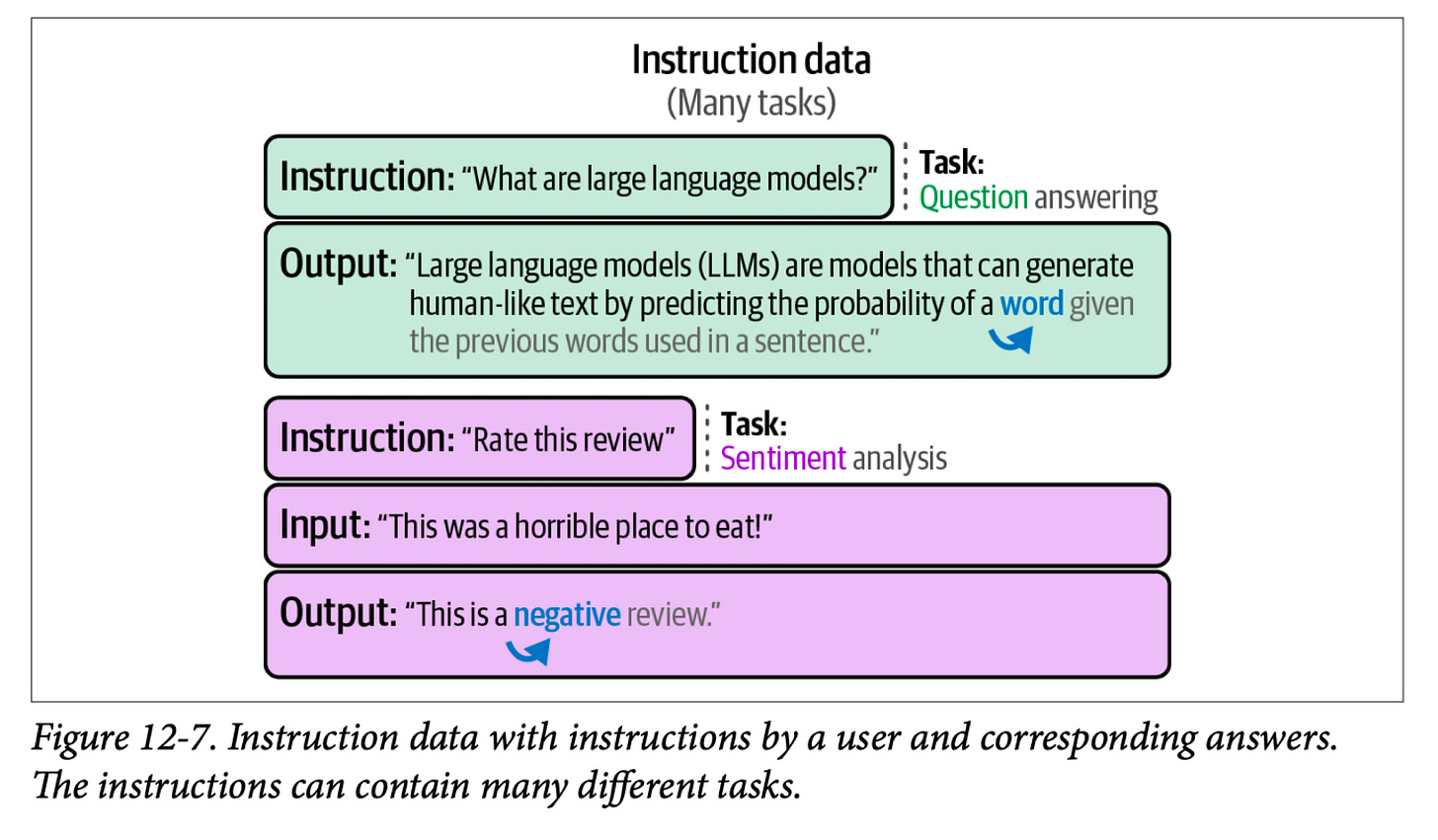

(View Highlight) - If you’re new to the concept of Supervised Fine-Tuning (SFT), that is the process that presents the model with training examples in the form of prompt and correct completion. This figure from chapter 12 shows a couple of SFT training examples:

(View Highlight)

(View Highlight) - This enables R1 to excel at reasoning as well as other non-reasoning tasks. The process is similar to the the RL process we’ve seen before. But since it extends to non-reasoning applications, it utilizes a helpfulnes and a safety reward model (not unlike the Llama models) for prompts that belong to these applications.

(View Highlight)

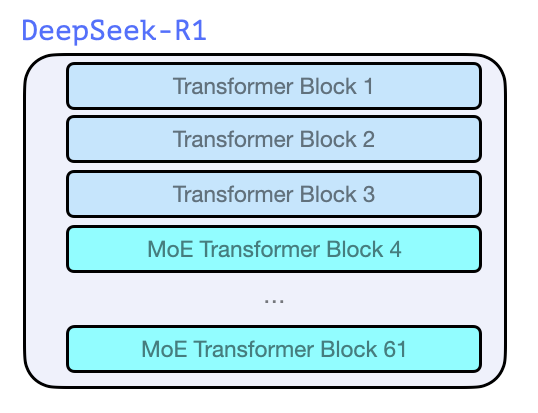

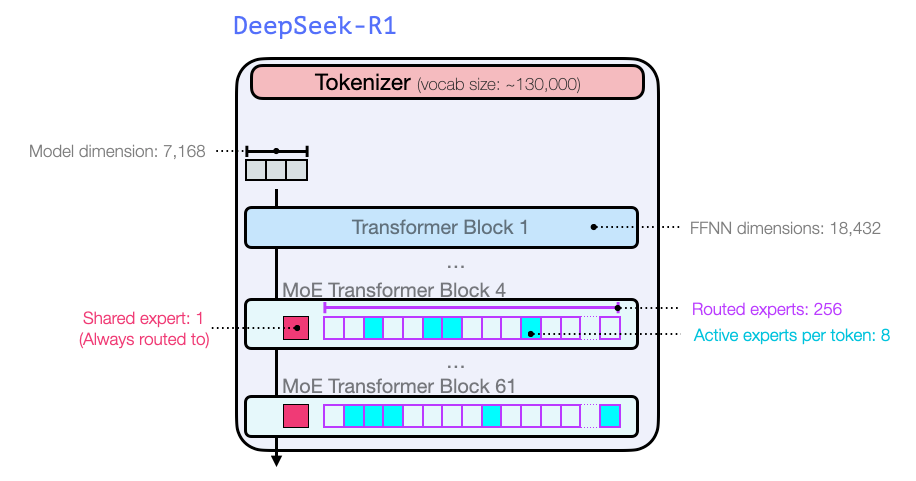

(View Highlight) - DeepSeek-R1 is a stack of Transformer decoder blocks. It’s made up 61 of them. The first three are dense, but the rest are mixture-of-experts layers (See my co-author Maarten’s incredible intro guide here: A Visual Guide to Mixture of Experts (MoE)).

In terms of model dimension size and other hyperparameters, they look like this:

In terms of model dimension size and other hyperparameters, they look like this:

(View Highlight)

(View Highlight) - With this, you should now have the main intuitions to wrap your head around the DeepSeek-R1 model. (View Highlight)