Source: LangChain agents in production

Three participants besides Harrison Chase (LangChain):

Samantha Whitmore

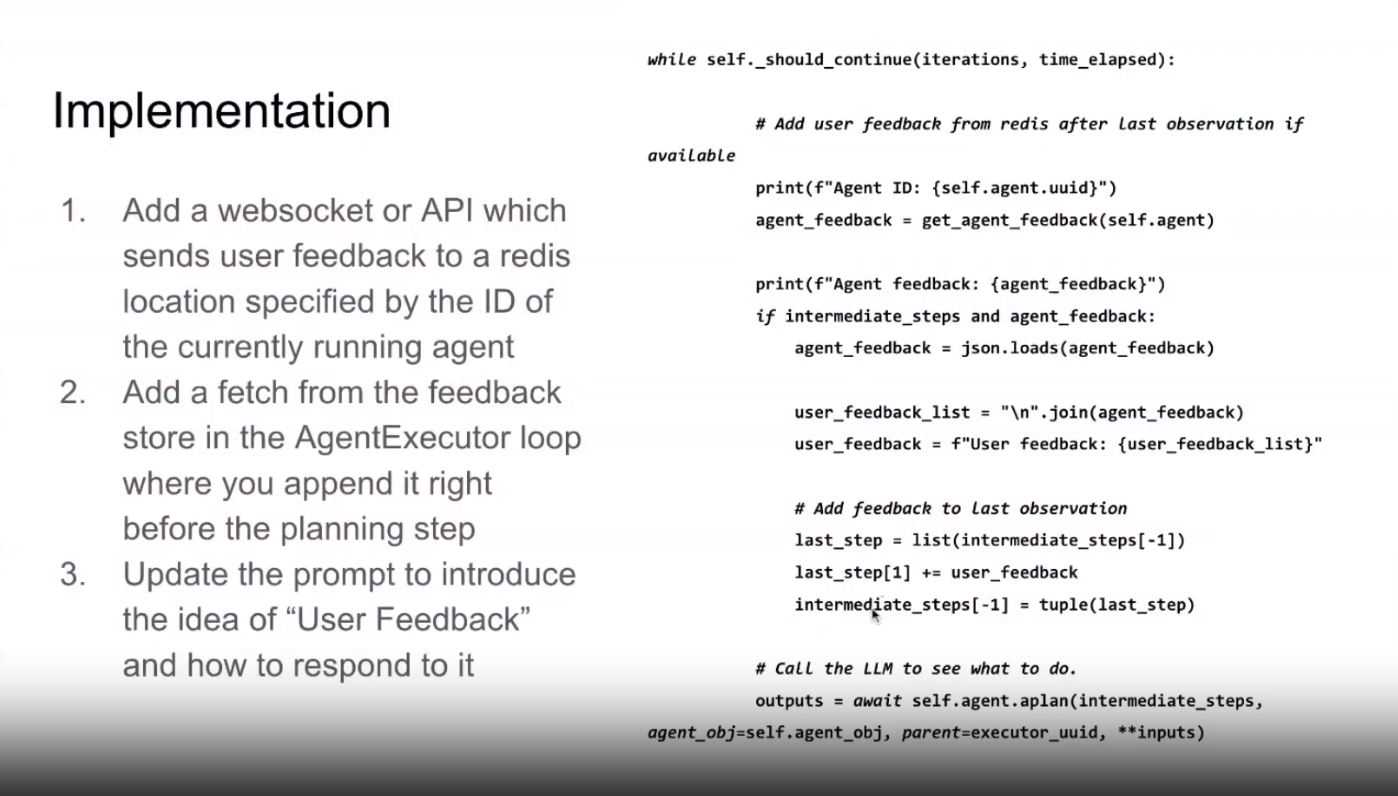

On how to avoid rabbit holes:

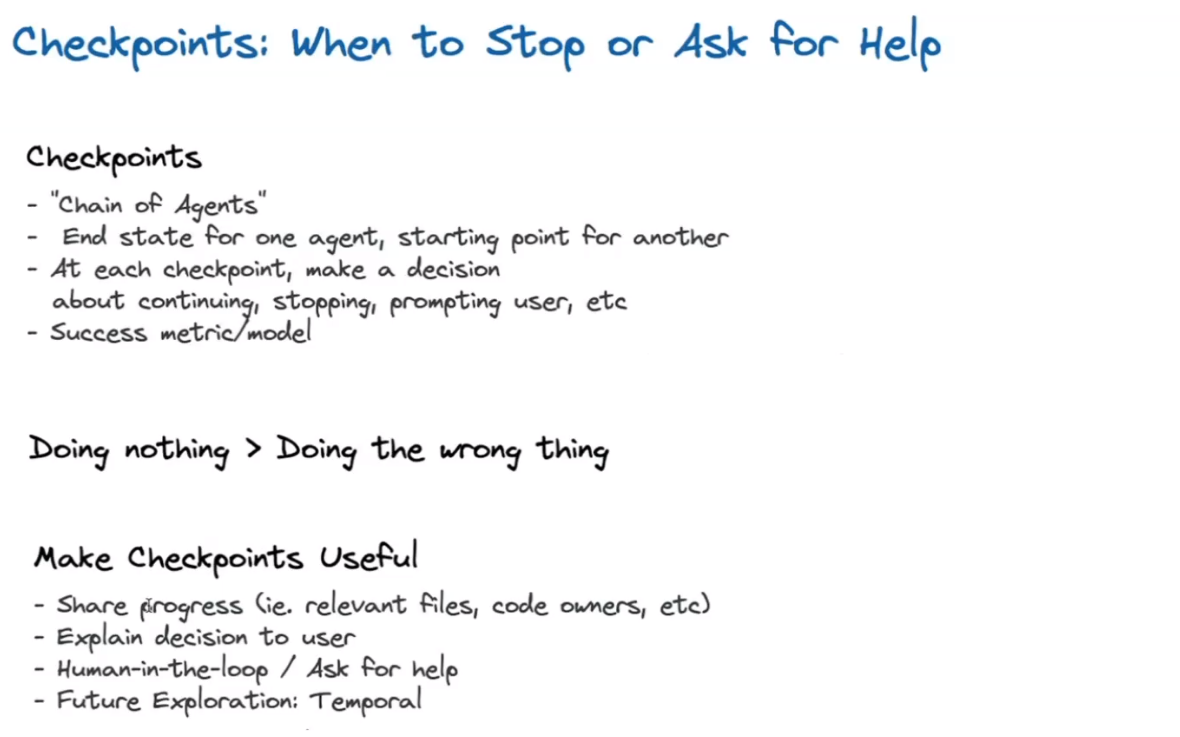

AutoGPT uses a different UX: at each step it asks the user whether to continue. Samantha responds that interacting with an agent that way is frustrating for the user, and that their implementation makes it smoother.

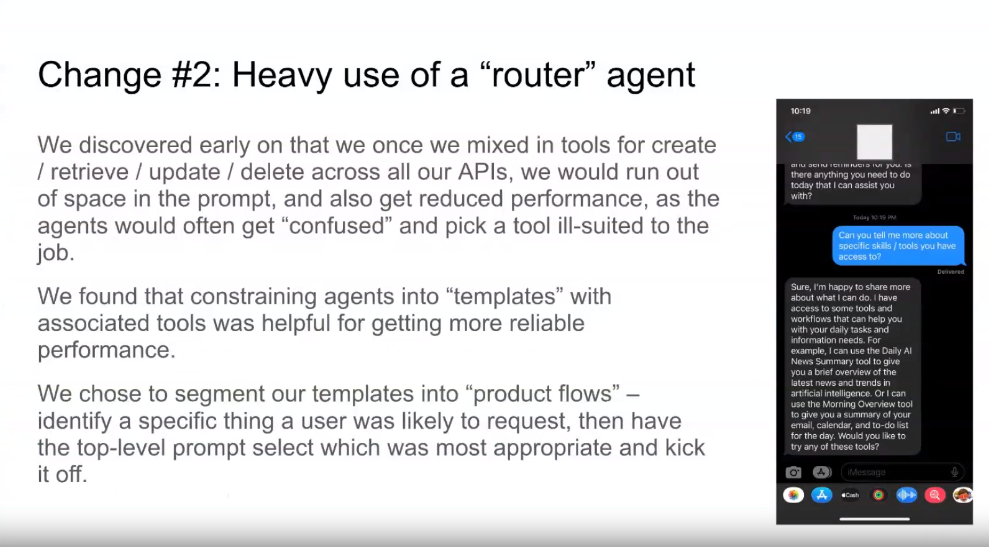

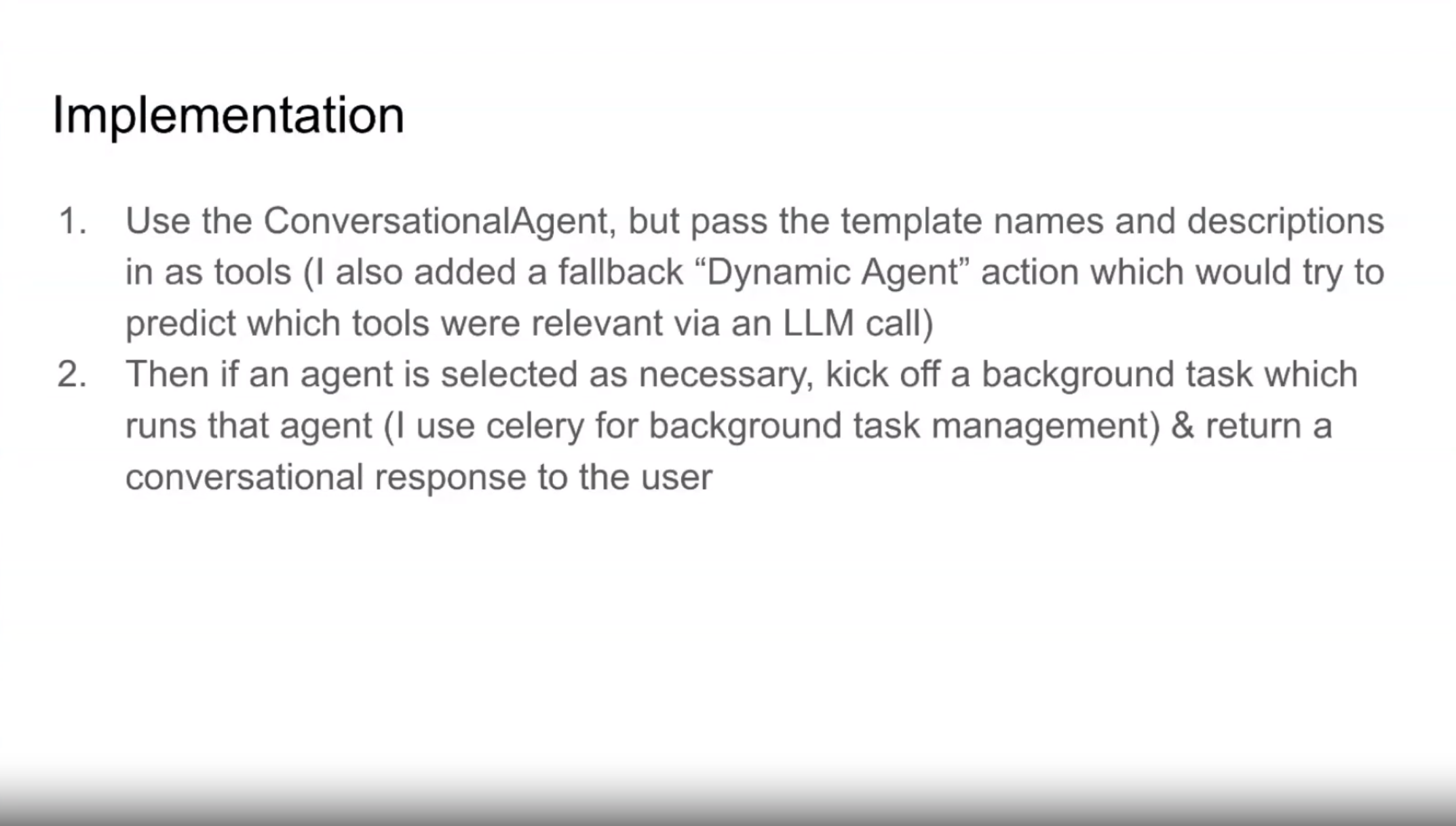

The second problem she shares is that the router agent easily runs out of space in the prompt, which reduces performance and confuses the agents. They constrained agents to templates, each with its associated tools.

Each agent template bundles a prompt, inputs, and a toolset.
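A minimal sketch of such a template, assuming a template is just a prompt string, the input names it expects, and a dictionary of tools. `AgentTemplate`, `TEMPLATES` and the example tools are hypothetical, not Samantha's code. It shows why templates help with prompt space: a rendered prompt lists only its own template's tools, not every tool in the product.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical tool signature: takes a string argument, returns a string result.
Tool = Callable[[str], str]


@dataclass
class AgentTemplate:
    """Hypothetical agent template: a prompt, the inputs it expects, and its toolset."""
    name: str
    prompt: str
    inputs: List[str]
    tools: Dict[str, Tool] = field(default_factory=dict)

    def render(self, **values: str) -> str:
        # Fail early if a required input is missing.
        missing = [k for k in self.inputs if k not in values]
        if missing:
            raise ValueError(f"missing inputs: {missing}")
        # Only this template's tools are listed, which keeps the prompt short.
        tool_list = ", ".join(sorted(self.tools))
        return self.prompt.format(**values) + f"\nTools available: {tool_list}"


# Illustrative templates; the tools are dummy lambdas.
TEMPLATES = {
    "summarize_doc": AgentTemplate(
        name="summarize_doc",
        prompt="Summarize the document titled {title}.",
        inputs=["title"],
        tools={"fetch_doc": lambda title: f"<contents of {title}>"},
    ),
    "draft_email": AgentTemplate(
        name="draft_email",
        prompt="Draft an email to {recipient} about {topic}.",
        inputs=["recipient", "topic"],
        tools={
            "lookup_contact": lambda name: f"{name}@example.com",
            "send_email": lambda body: "sent",
        },
    ),
}

print(TEMPLATES["draft_email"].render(recipient="Ana", topic="the launch"))
```

A router in this design only has to choose among template names, and the chosen agent never sees tools from other templates.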

The ConversationalAgent is the user-facing interface: it tells you that it has started a background task, which lets the user keep talking to the agent.
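The background-task pattern can be sketched with `asyncio`, assuming the long-running work is an async function. `ConversationalAgent` here is a hypothetical stand-in (not the class from the talk), as are `run_template_agent` and the `do ` command prefix. `asyncio.create_task` schedules the work without awaiting it, so the agent can reply immediately and keep handling messages.

```python
import asyncio
from typing import List


async def run_template_agent(task: str) -> str:
    # Stand-in for a long-running agent; a real one would call an LLM and tools.
    await asyncio.sleep(0.1)
    return f"done: {task}"


class ConversationalAgent:
    """Hypothetical front-end agent that delegates slow work to background tasks."""

    def __init__(self) -> None:
        self.background: List[asyncio.Task] = []

    async def handle(self, message: str) -> str:
        if message.startswith("do "):
            # Schedule the work without awaiting it, so the conversation continues.
            task = asyncio.create_task(run_template_agent(message[3:]))
            self.background.append(task)
            return f"I've started a background task for '{message[3:]}'."
        return f"(chatting) You said: {message}"


async def main() -> List[str]:
    agent = ConversationalAgent()
    replies = [await agent.handle("do summarize the report")]
    # The user keeps talking while the background task is still running.
    replies.append(await agent.handle("what's the weather like?"))
    results = await asyncio.gather(*agent.background)
    return replies + list(results)


if __name__ == "__main__":
    for line in asyncio.run(main()):
        print(line)
```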

Divyansh Garg

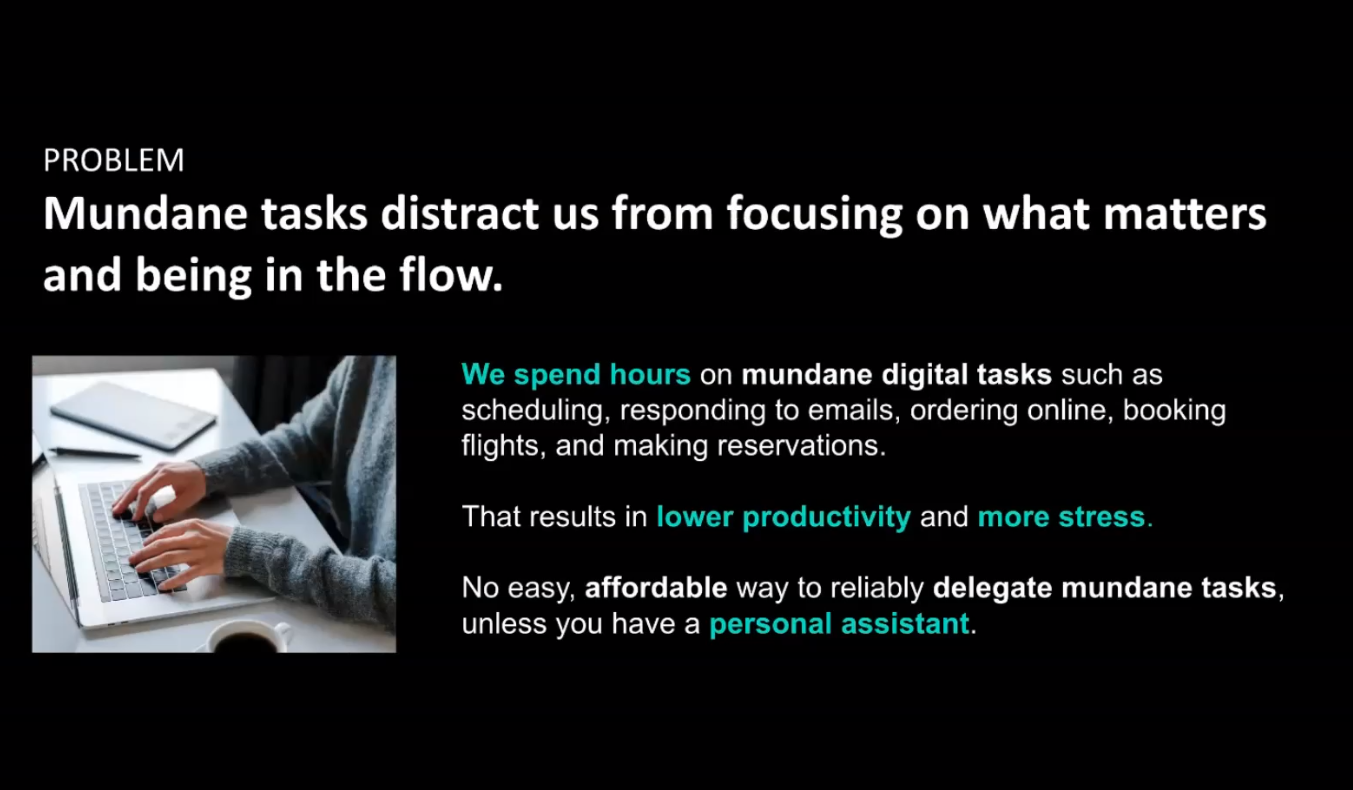

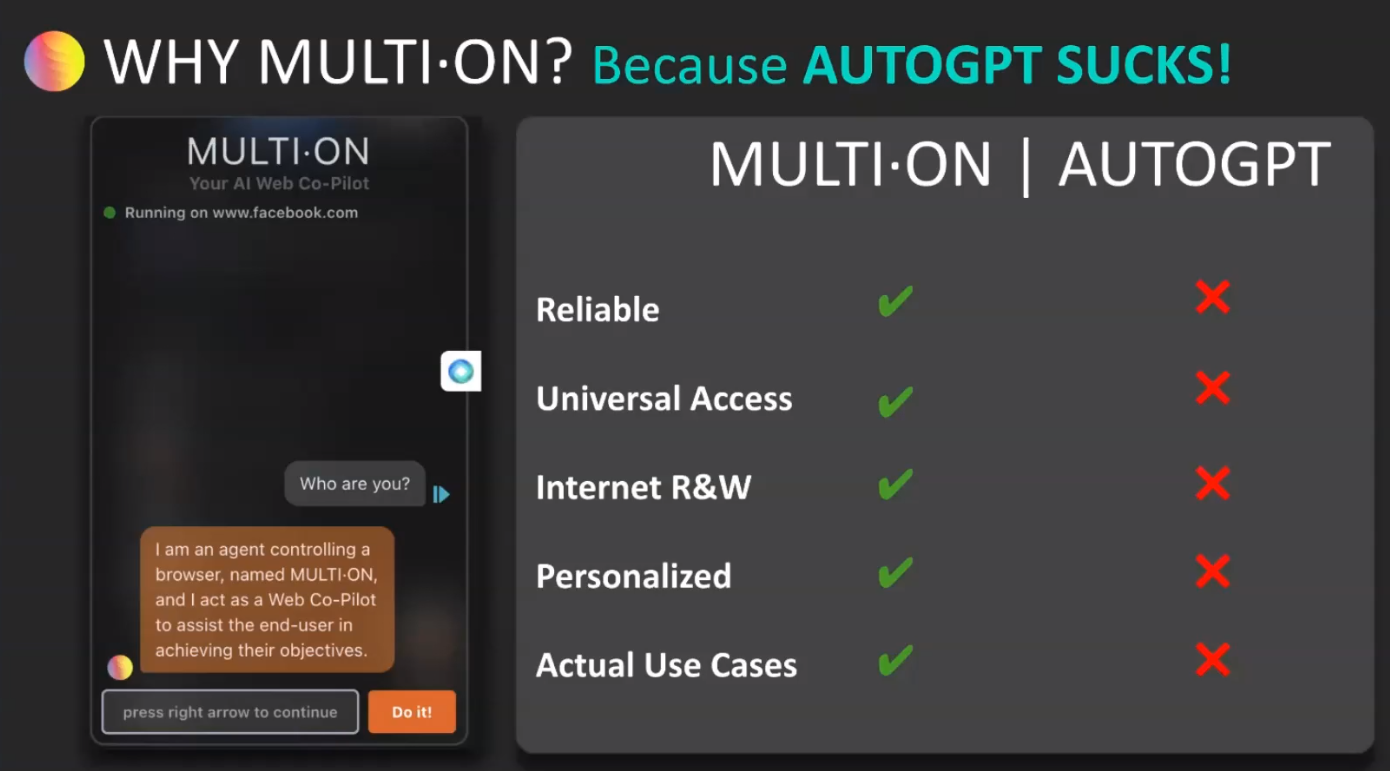

Multi-on: an AI copilot available as a ChatGPT plugin, i.e. an AI agent that acts on your behalf.

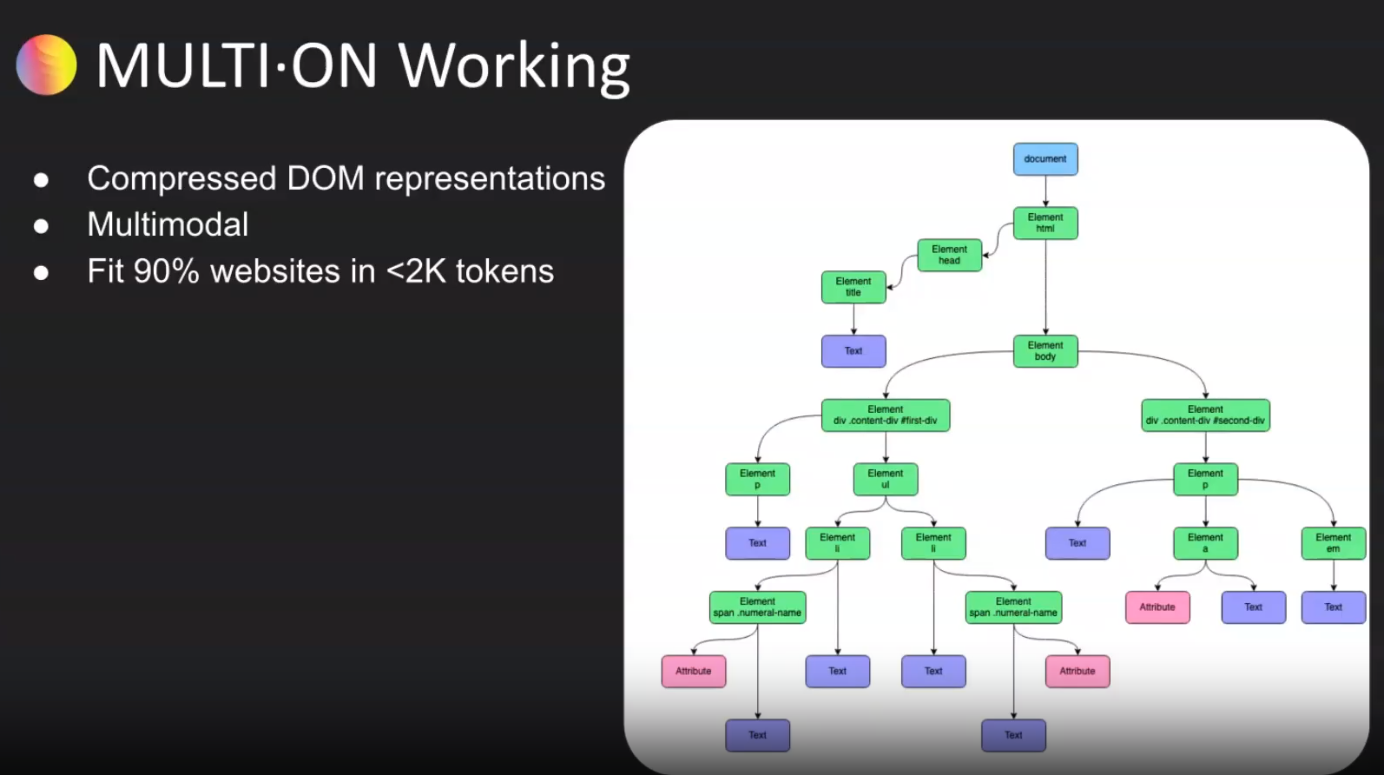

They use compressed DOM representations, built with a fairly standard scraper that works for almost any website; you can see it here:

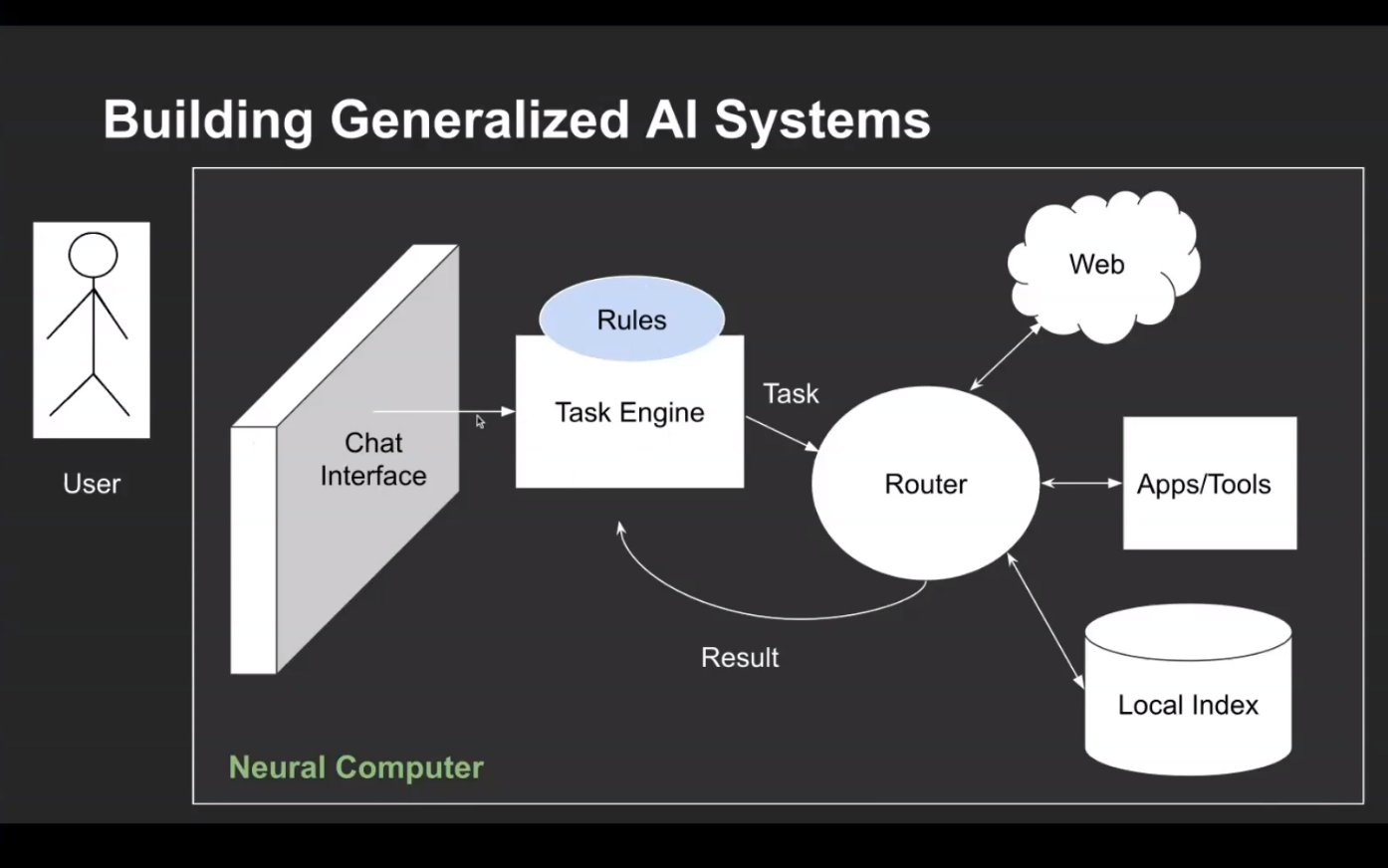
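The notes don't say what Multi-on's scraper actually does. As a rough sketch of the general idea, assuming that "compressed" means keeping only the elements an agent can click or type into, the standard-library `html.parser` can reduce a page to a short indexed list. `INTERACTIVE`, `KEEP_ATTRS`, `DomCompressor` and `compress_dom` are hypothetical names.

```python
from html.parser import HTMLParser
from typing import List, Optional

# Assumption: the agent only needs elements it can click or type into.
INTERACTIVE = {"a", "button", "input", "select", "textarea"}
KEEP_ATTRS = ("href", "type", "name", "placeholder", "aria-label")


class DomCompressor(HTMLParser):
    """Reduces a page to an indexed list of interactive elements.
    Nested interactive elements are not handled (a simplification)."""

    def __init__(self) -> None:
        super().__init__()
        self.lines: List[str] = []
        self._current: Optional[list] = None  # [tag, attr_str, text_parts]

    def handle_starttag(self, tag, attrs):
        if tag not in INTERACTIVE:
            return
        attr_str = " ".join(f'{k}="{v}"' for k, v in attrs if k in KEEP_ATTRS and v)
        if tag == "input":
            self._emit(tag, attr_str, "", void=True)  # <input> has no closing tag
        else:
            self._current = [tag, attr_str, []]

    def handle_data(self, data):
        # Keep visible text only while inside an interactive element.
        if self._current is not None and data.strip():
            self._current[2].append(data.strip())

    def handle_endtag(self, tag):
        if self._current is not None and tag == self._current[0]:
            t, attr_str, parts = self._current
            self._emit(t, attr_str, " ".join(parts))
            self._current = None

    def _emit(self, tag, attr_str, text, void=False):
        open_tag = f"<{tag} {attr_str}>" if attr_str else f"<{tag}>"
        body = "" if void else f"{text}</{tag}>"
        self.lines.append(f"[{len(self.lines)}] {open_tag}{body}")


def compress_dom(html: str) -> str:
    parser = DomCompressor()
    parser.feed(html)
    parser.close()
    return "\n".join(parser.lines)


page = """
<html><body>
  <div class="nav"><a href="/login">Log in</a></div>
  <p>Welcome to the store!</p>
  <form><input type="text" name="q" placeholder="Search">
  <button type="submit">Go</button></form>
</body></html>
"""
print(compress_dom(page))
```

The sample page shrinks to three indexed lines, so an LLM can refer to elements by number (for example "type into [1], then click [2]") instead of reading the full DOM.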

How to make it reliable: evaluating and monitoring the "Neural Network computer".

Harrison asks him how Multi-on differs from Adept ("Useful General Intelligence").

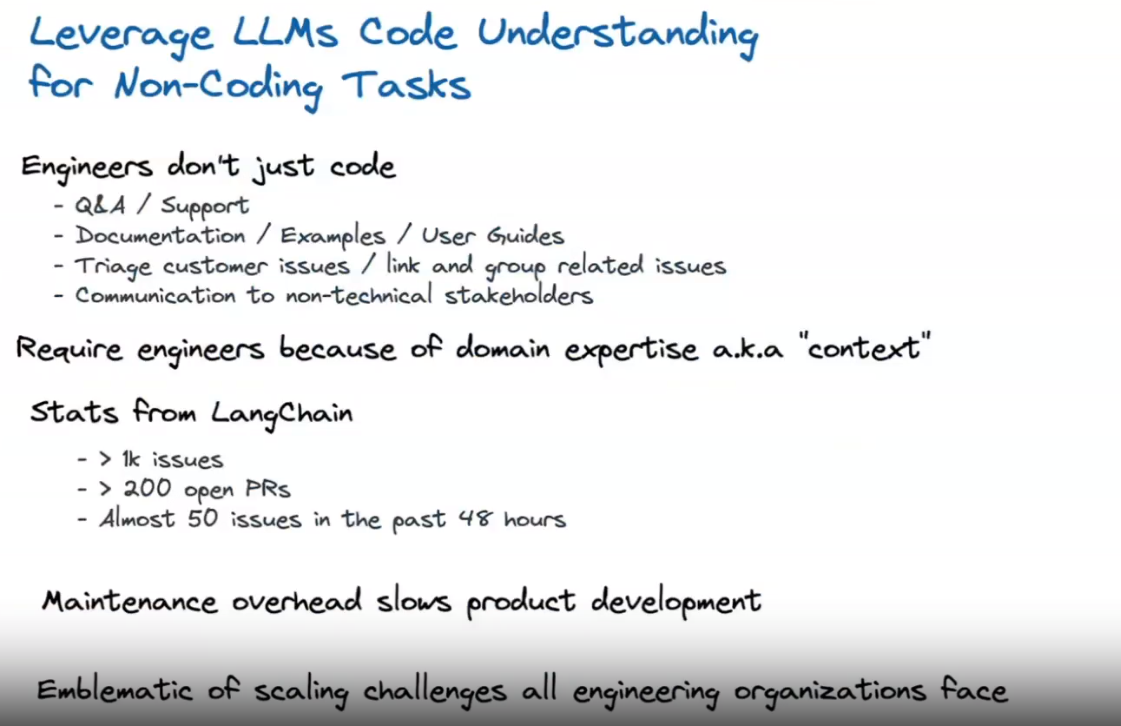

Models are important, but these are systems, and the model is just one part of them.

Devin Stein

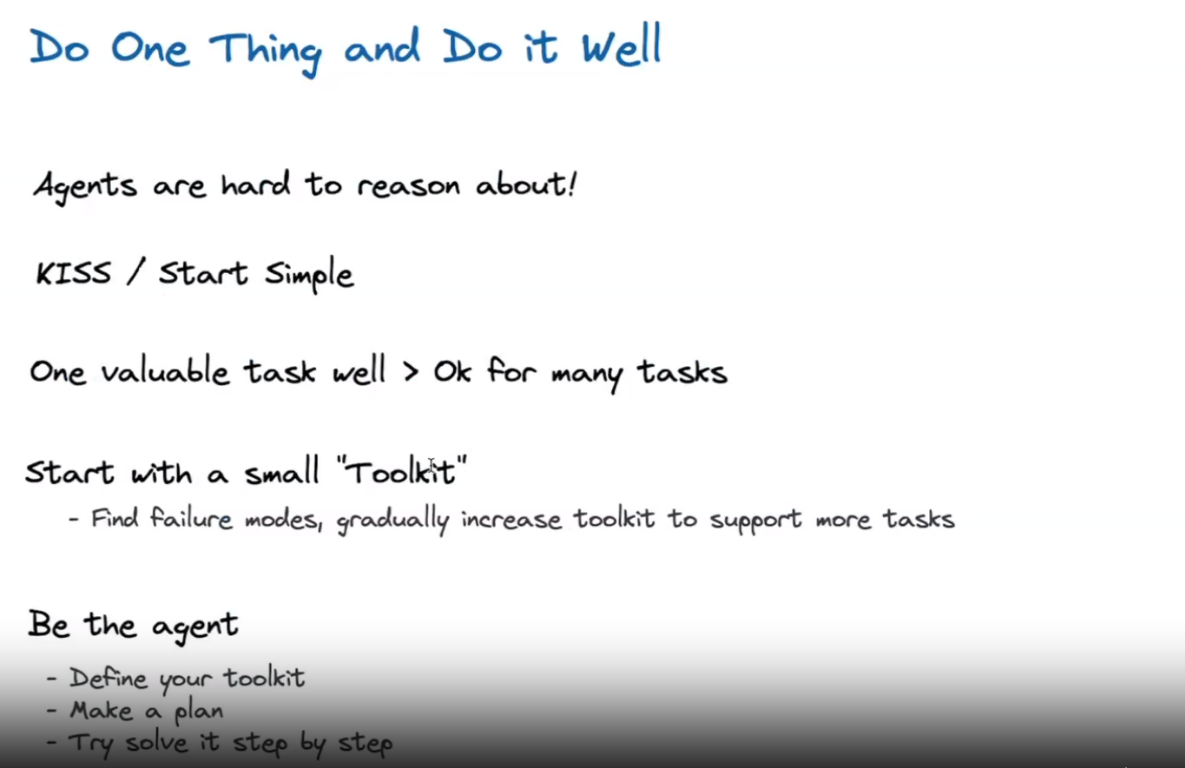

An important principle for agents: if you can’t do a task, don’t do it. Otherwise you create more harm and confusion than good.
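One way to encode this principle, sketched under the assumption that an upstream step (for example an LLM classifier) has already produced an intent label and a confidence score: the agent acts only on intents it actually supports, above a confidence threshold, and otherwise declines or asks for clarification. `SUPPORTED`, `Decision`, `decide` and the 0.8 threshold are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical set of tasks this agent is known to handle well.
SUPPORTED = {"reset_password", "update_billing", "cancel_order"}


@dataclass
class Decision:
    action: Optional[str]  # None means the agent declines to act
    message: str


def decide(intent: str, confidence: float, threshold: float = 0.8) -> Decision:
    """Act only on supported intents recognized with enough confidence."""
    if intent not in SUPPORTED:
        # Out of scope: hand off rather than improvise.
        return Decision(None, "Sorry, I can't help with that yet. Routing you to a human.")
    if confidence < threshold:
        # In scope but unsure: ask instead of guessing.
        return Decision(None, "I'm not sure I understood. Could you rephrase?")
    return Decision(intent, f"Running {intent}.")


print(decide("cancel_order", 0.93))
print(decide("cancel_order", 0.55))
print(decide("write_poem", 0.99))
```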

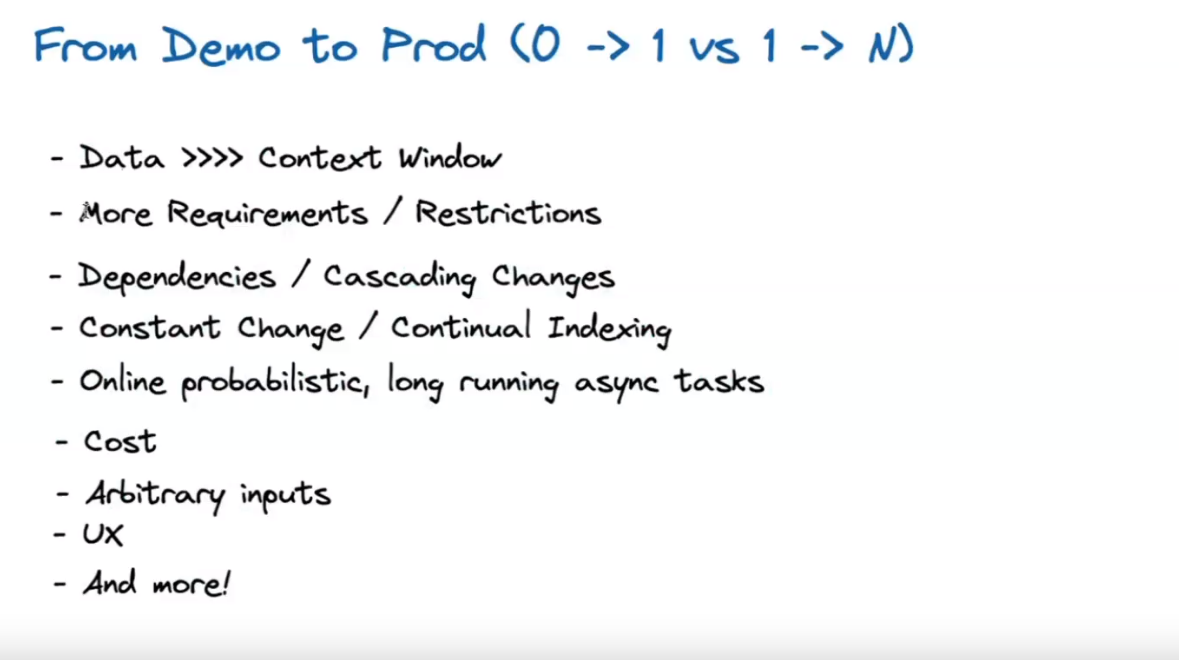

Challenges in production:

Plan-and-execute agents: the plan can be fixed, or it can be revisited. For the baseline they keep the plan fixed to avoid falling into a loop, but in the long term they are considering revisiting the initial plan.
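A minimal plan-and-execute loop illustrating the trade-off, assuming a planner that maps the goal and the results so far to a list of remaining steps. `plan_and_execute`, `toy_planner`, `stuck_planner` and `max_steps` are hypothetical. With `replan=False` the plan is fixed and the loop always terminates; with `replan=True` the planner is consulted after every step, which is more adaptive but can loop, so a step cap is needed.

```python
from typing import Callable, List

# (goal, results so far) -> remaining steps
Planner = Callable[[str, List[str]], List[str]]
Executor = Callable[[str], str]


def plan_and_execute(goal: str, planner: Planner, execute: Executor,
                     replan: bool = False, max_steps: int = 10) -> List[str]:
    """Run a plan step by step. With replan=False the initial plan is fixed;
    with replan=True the remaining steps are re-planned after every step."""
    results: List[str] = []
    steps = planner(goal, results)
    # max_steps bounds the loop, which matters once replanning is enabled.
    while steps and len(results) < max_steps:
        step = steps.pop(0)
        results.append(execute(step))
        if replan:
            steps = planner(goal, results)
    return results


def toy_planner(goal: str, results: List[str]) -> List[str]:
    # Hypothetical planner: a fixed three-step recipe, minus the steps already done.
    recipe = ["search docs", "read top result", "write answer"]
    return recipe[len(results):]


def stuck_planner(goal: str, results: List[str]) -> List[str]:
    # Hypothetical planner that always thinks one more search is needed.
    return ["search docs"]


def run(step: str) -> str:
    return f"did {step}"


print(plan_and_execute("answer the ticket", toy_planner, run))
print(len(plan_and_execute("answer the ticket", stuck_planner, run)))
print(len(plan_and_execute("answer the ticket", stuck_planner, run, replan=True)))
```

With `stuck_planner`, the fixed-plan run executes a single step, while the replanning run keeps going until it hits `max_steps`: the loop that a fixed baseline avoids.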

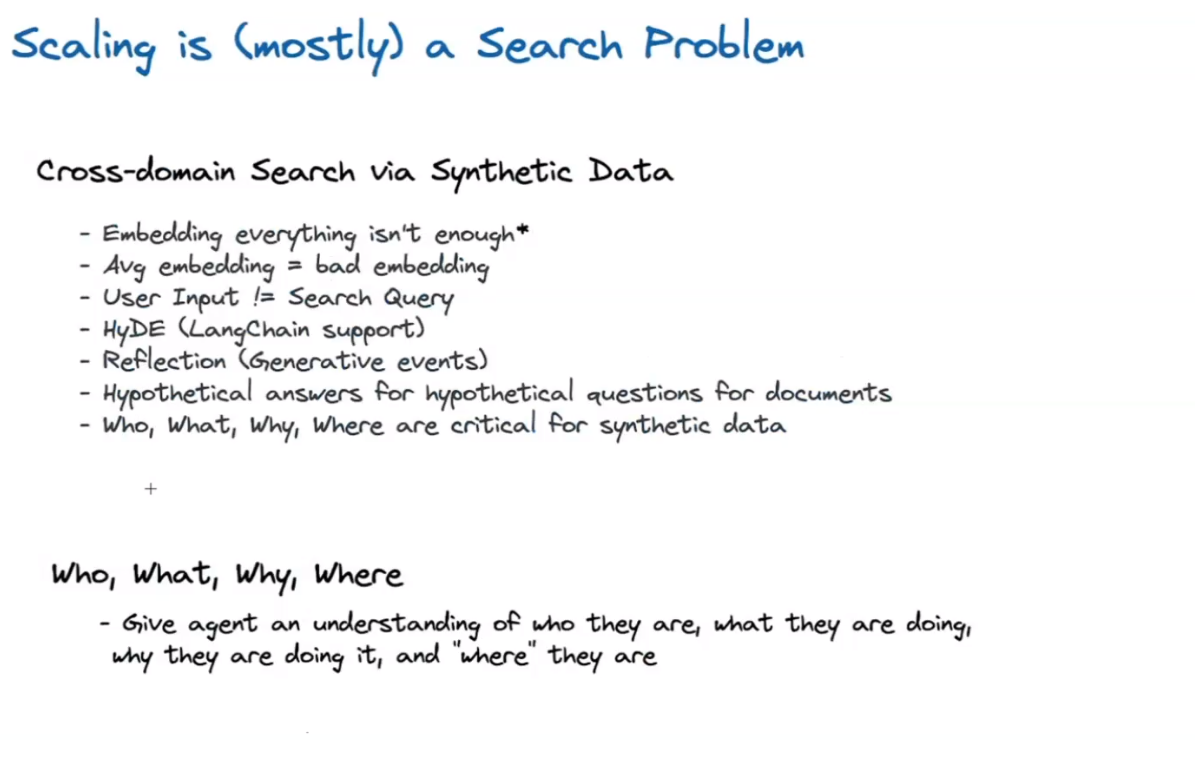

Navigating the documents and resources, i.e. knowing the "where", is very important and helps the agent get better.

What the user requests is neither a prompt nor a query. You have to map it to your closed-domain problem: for example, you can ask the LLM to reformulate the user request as a customer-support query, or whatever form you need.
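A sketch of that mapping step, assuming a customer-support domain with a fixed set of categories and an `llm` callable that takes a prompt string and returns text. The prompt wording, `CATEGORIES`, `to_support_query` and `fake_llm` are hypothetical. Validating the model's output against the closed domain, with a fallback, keeps a malformed reformulation from leaking into the rest of the pipeline.

```python
import json
from typing import Callable, Dict

# Hypothetical closed domain for a customer-support product.
CATEGORIES = ["billing", "shipping", "account", "other"]

REFORMULATE = """Rewrite the user's message as a customer-support query.
Respond only with JSON: {{"category": one of {categories}, "query": a short, self-contained question}}.
User message: {message}"""


def to_support_query(message: str, llm: Callable[[str], str]) -> Dict[str, str]:
    """Map a free-form user message onto the closed domain via the LLM, then validate."""
    raw = llm(REFORMULATE.format(categories=CATEGORIES, message=message))
    try:
        parsed = json.loads(raw)
    except json.JSONDecodeError:
        parsed = None
    if (not isinstance(parsed, dict)
            or parsed.get("category") not in CATEGORIES
            or not parsed.get("query")):
        # Fall back instead of passing malformed output downstream.
        return {"category": "other", "query": message}
    return {"category": parsed["category"], "query": str(parsed["query"])}


def fake_llm(prompt: str) -> str:
    # Stand-in for a real model call; always returns the same canned answer.
    return '{"category": "shipping", "query": "Where is my order #123?"}'


print(to_support_query("ugh, my package STILL hasn't shown up (order 123)", fake_llm))
```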

Audience Questions

I am still struggling to distinguish between a “single-agent, multi-tool” architecture and a multi-agent architecture. Are there any resources you would all recommend to help me understand this better? Particularly for “multi-agent” chains that don’t hardcode the agent order.

One of the biggest obstacles to getting my agent into production is speed. Agents are powerful, but they’ll inevitably slow down the response time. What would be some good approaches to accommodate this? Some ideas I can think of: 1. running agents in parallel, 2. streaming the intermediate results so users have a better UX.

Answer: load similar results from a cache, stream intermediate output, and parallelize; which of these matters most really depends on the baseline/benchmark for your specific case.
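The three ideas in the answer can be combined in a small `asyncio` sketch. `sub_agent`, `answer`, `demo` and the in-memory `_cache` are hypothetical, and an exact match on a lower-cased, stripped query stands in for finding "similar" results (a real system might use embeddings instead). Sub-agents run in parallel, each partial result is yielded as soon as it finishes, and the final answer is cached for the next matching request.

```python
import asyncio
from typing import AsyncIterator, Dict, List

# Hypothetical in-memory cache: normalized query -> final answer.
_cache: Dict[str, str] = {}


async def sub_agent(name: str, query: str) -> str:
    # Stand-in for a slow LLM or tool call.
    await asyncio.sleep(0.05)
    return f"{name}: result for {query!r}"


async def answer(query: str, agents: List[str]) -> AsyncIterator[str]:
    """Yield intermediate results as they arrive, then the final answer."""
    key = query.strip().lower()  # crude normalization standing in for "similar"
    if key in _cache:
        yield f"(cached) {_cache[key]}"
        return
    # Start all sub-agents at once so they run in parallel.
    tasks = [asyncio.create_task(sub_agent(a, query)) for a in agents]
    partials: List[str] = []
    for finished in asyncio.as_completed(tasks):
        partial = await finished
        partials.append(partial)
        yield partial  # stream each intermediate result as soon as it is ready
    final = " | ".join(sorted(partials))
    _cache[key] = final
    yield f"final: {final}"


async def demo() -> List[str]:
    _cache.clear()  # start the demo from an empty cache
    out = [chunk async for chunk in answer("Refund policy?", ["docs", "tickets"])]
    # A near-identical follow-up is served from the cache.
    out += [chunk async for chunk in answer("refund policy? ", ["docs", "tickets"])]
    return out


if __name__ == "__main__":
    for line in asyncio.run(demo()):
        print(line)
```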

They use GPT-3.5 (few-shot) for intermediate steps, and GPT-4 (zero-shot) for planning and for synthesizing the final response.
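The model split described here can be expressed as a per-step configuration. This sketch assumes OpenAI-style chat messages and the model IDs `gpt-4` and `gpt-3.5-turbo`; `ModelChoice`, `MODEL_FOR_STEP`, `FEW_SHOT_EXAMPLES` and `build_messages` are hypothetical, and the actual API call is left out. Few-shot examples are prepended only for the cheaper intermediate-step model.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class ModelChoice:
    model: str
    few_shot: bool


# Step name -> model; mirrors the split described in the talk.
MODEL_FOR_STEP: Dict[str, ModelChoice] = {
    "plan": ModelChoice("gpt-4", few_shot=False),
    "intermediate": ModelChoice("gpt-3.5-turbo", few_shot=True),
    "synthesize": ModelChoice("gpt-4", few_shot=False),
}

# Hypothetical (user, assistant) example pairs for the few-shot model.
FEW_SHOT_EXAMPLES: List[Tuple[str, str]] = [
    ("Extract the order id from: 'where is order 42?'", "42"),
    ("Extract the order id from: 'order 7 arrived broken'", "7"),
]


def build_messages(step: str, prompt: str) -> Tuple[str, List[Dict[str, str]]]:
    """Return the model name and chat messages to send for a given step."""
    choice = MODEL_FOR_STEP[step]
    messages: List[Dict[str, str]] = []
    if choice.few_shot:
        for user, assistant in FEW_SHOT_EXAMPLES:
            messages.append({"role": "user", "content": user})
            messages.append({"role": "assistant", "content": assistant})
    messages.append({"role": "user", "content": prompt})
    return choice.model, messages


model, messages = build_messages("intermediate", "Extract the order id from: 'refund order 9'")
print(model, len(messages))
```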

Sam says she uses zero-shot prompting, with specific prompts.

Would love to hear your testing strategies, as the outputs are non-deterministic and you can’t use traditional unit testing.

Answer: a lot of manual testing, with a high associated cost.

How do you implement routing? Does the router agent have the secondary agents as tools? (Q for Sam)

She asks the model which program to follow; the templates and the product flows are exposed to it as tools. Executing a tool kicks off another agent in the background.

Divyansh uses the ChatGPT plugin system for routing.